The Death of Moore's Law and the Birth of the AI Factory at Nvidia’s 2025 GTC Conference

Key announcements from Nvidia’s GTC keynote.

Delivering the NVIDIA GTC keynote in Washington, DC, on October 28, 2025, CEO Jensen Huang argued that sustaining AI's exponential growth will require re-architecting computing infrastructure. He presented the "AI Factory" as the infrastructure needed to deliver a 10x reduction in the cost of generated tokens, and positioned Extreme Co-Design and the rise of agentic AI workers as the new mandate for every technology leader.

Photo: Shashi Bellamkonda

Huang noted that the industry has long relied on Moore's Law, the observation that the number of transistors on an integrated circuit doubles roughly every two years, to keep improving price/performance. Its companion principle, Dennard Scaling, held that as transistors shrank their power density stayed constant, so packing more of them onto a chip made it more powerful without a proportional increase in power consumption. With both trends now slowing, chip-level gains alone can no longer feed the insatiable demand for ever faster, more energy-efficient compute to power these AI factories without driving build costs even higher. That gap is the foundation of Huang’s proposed Extreme Co-Design.
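
To put the 10x target in perspective, here is a minimal sketch of the arithmetic, assuming an idealized cadence in which capability doubles exactly every two years. The function names are illustrative, and the figures are not Nvidia's.

```python
import math


def gain_after(years: float, doubling_period_years: float = 2.0) -> float:
    """Improvement factor after `years` if capability doubles every period."""
    return 2.0 ** (years / doubling_period_years)


def years_to_reach(target_gain: float, doubling_period_years: float = 2.0) -> float:
    """Years needed to reach `target_gain` at the same doubling cadence."""
    return doubling_period_years * math.log2(target_gain)


if __name__ == "__main__":
    # Assumption: a strict two-year doubling, the idealized Moore's Law pace.
    print(f"Gain after 10 years: {gain_after(10):.0f}x")
    print(f"Years to reach 10x: {years_to_reach(10):.1f}")
```

Under that assumption, a decade yields a 32x gain, and a 10x gain takes about 6.6 years. Reaching 10x sooner has to come from somewhere other than transistor scaling, which is the argument for optimizing the whole stack.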

Huang’s AI Factory concept depends on co-design: optimizing the infrastructure across multiple layers simultaneously to achieve the needed 10x performance improvements while lowering costs. Those layers are:

- The compute (GPU, CPU, ASIC)

- The software (CUDA, libraries, frameworks)

- The system (server racks, cooling, power distribution)

- The network (interconnects between GPUs and systems)

This may distinguish Nvidia, as the company builds products across most of this stack, not just GPUs.

These are the announcements that people were really talking about at the Nvidia GTC conference:

- NVQLink is an open system architecture and high-speed interconnect designed to directly connect fragile quantum processors (QPUs) with powerful NVIDIA GPU supercomputers.

- Spectrum-X Ethernet is an Ethernet solution built specifically for high-performance scaling of AI supercomputers, addressing the limits of standard Ethernet. It is designed to ensure that all the high-powered processors in the AI racks can communicate nearly simultaneously while reducing network latency.

- NVIDIA and Nokia are collaborating on Aerial RAN Computer Pro (ARC-Pro), a 6G-ready accelerated computing platform built from the Grace CPU, Blackwell GPU, and Mellanox ConnectX networking, designed for telecommunications infrastructure. This partnership represents NVIDIA's broader effort to bring domestically produced 6G telecommunications infrastructure to the United States, supporting both advanced wireless capabilities and next-generation industrial applications.

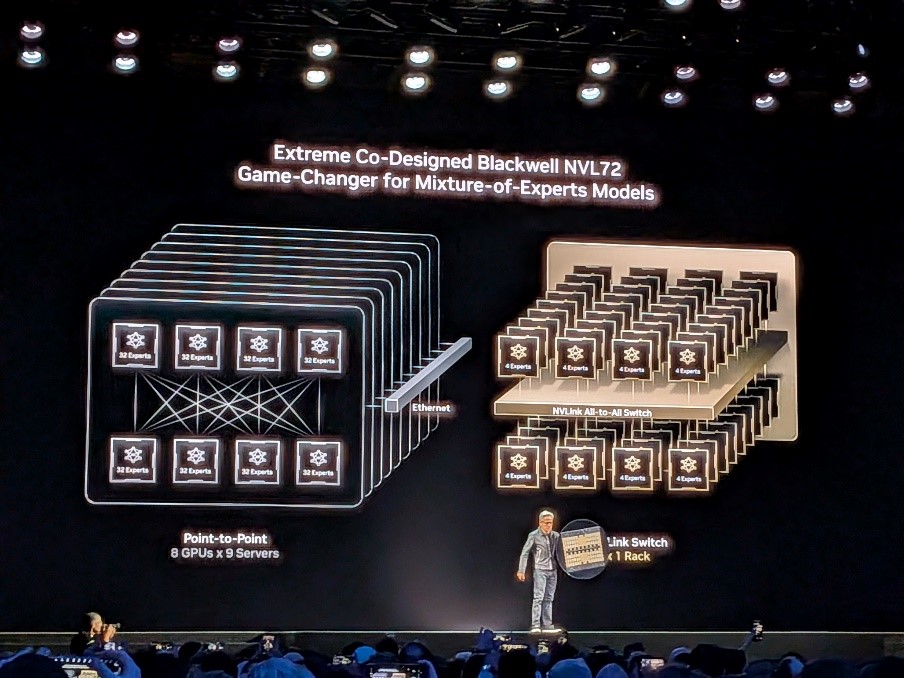

- Huang also introduced the NVL72, a high-density, rack-scale system architecture built by NVIDIA for extreme AI and supercomputing workloads. It overcomes the limits of traditional servers by tightly integrating multiple processors, allowing it to handle multi-trillion-parameter LLMs more efficiently.

- Building cybersecurity agents with the NVIDIA NeMo software suite is another critical use case. CrowdStrike is using the suite to develop highly capable virtual cyber employees, or “deep agents,” that automate and enhance security operations.

- Nvidia's IGX Thor robotics processor is tailored for industrial and medical applications, enabling real-time artificial intelligence in a variety of physical devices, including robots, medical equipment, factories, and machinery.

Image: Nvidia Industry analyst team

Our Take

Nvidia is currently the leading supplier of the GPUs that power hyperscalers and the AI explosion, but its biggest customers are also developing their own alternatives to avoid complete lock-in to a near-monopoly vendor. To maintain differentiation, Nvidia is expanding beyond hardware by introducing open-source AI tools in language, robotics, and biology. At GTC, the company launched open model families like Nemotron (digital AI), Cosmos (physical AI), Isaac GR00T (robotics), and Clara (biomedical AI), empowering developers to create specialized intelligent agents for practical use.

Technology leaders should have their teams evaluate the open-source resources offered by Nvidia for new projects. Leaders in manufacturing and healthcare should consider the Physical AI processors for AI at the edge.

As organizations look to expand their AI capabilities, platform providers will likely offer more hardware options as companies like AMD and Broadcom develop competitive chips.