Any Model, Any Hardware, Any Cloud: Red Hat & Cisco’s Vision for Open, Flexible AI

Red Hat and Cisco's jointly developed, enterprise-grade AI platform.

During a recent briefing (October 2025), Red Hat and Cisco introduced a jointly developed, enterprise-grade AI platform that lets organizations run generative and agentic AI securely across hybrid environments (on-prem, cloud, and edge) using open-source models and hardware-agnostic infrastructure.

Image Source: Red Hat/Cisco analyst presentation

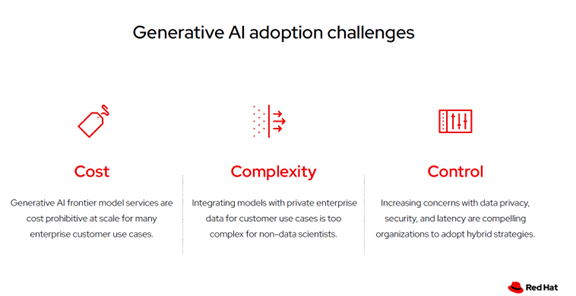

This solution tackles major obstacles enterprises face when adopting AI, such as unpredictable costs, data sovereignty requirements, and fragmented infrastructure. By combining Red Hat's and Cisco's offerings, organizations can run open-source models on-premises or across hybrid cloud environments, letting technology leaders shift spending away from variable cloud bills and toward building strong internal capabilities.

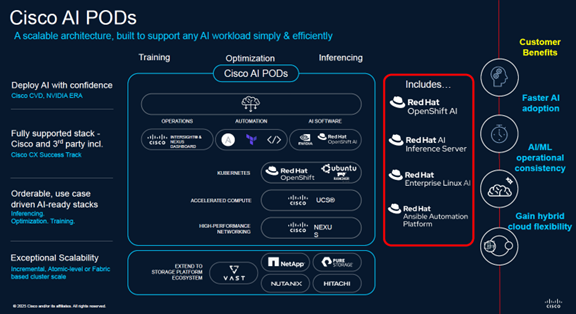

1. Full-Stack AI Infrastructure

Red Hat AI provides the software layer (OpenShift AI, RHEL AI, and AI Inference Server) to manage the entire AI lifecycle: model development, deployment, and monitoring.

Cisco complements this with AI PODs, validated hardware stacks that combine compute, networking, and storage optimized for demanding AI workloads.


2. Distributed Inference Engine

Running large AI models (like LLMs) requires significant computing power. The Red Hat and Cisco platform uses a distributed inference engine to split workloads across multiple machines and GPUs, enabling faster responses and the ability to handle high volumes of requests efficiently.
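To make "handling high volumes of requests" concrete, here is a minimal Python sketch of one generic idea behind distributed inference: routing each incoming request to the least-loaded model replica. It is an illustration under stated assumptions, not the platform's actual scheduler. The replica names, the per-request "cost" metric and the `route` function are all hypothetical. It also does not show the other half of distributed inference, which is splitting a single large model across several GPUs (tensor or pipeline parallelism).

```python
def route(requests, replicas):
    """Send each request to the replica with the least outstanding work.

    requests: list of (request_id, estimated_cost) pairs; the cost is a
              hypothetical proxy such as expected output tokens.
    replicas: names of model-server replicas (hypothetical), e.g. one per GPU node.
    """
    load = {name: 0 for name in replicas}
    assignment = {}
    for request_id, cost in requests:
        # Pick the least-loaded replica; break ties by name so results are deterministic.
        target = min(load, key=lambda name: (load[name], name))
        assignment[request_id] = target
        load[target] += cost
    return assignment, load


# Hypothetical workload: four requests of varying size, two GPU replicas.
assignment, load = route(
    [("a", 100), ("b", 40), ("c", 30), ("d", 50)],
    ["gpu-0", "gpu-1"],
)
print(assignment)  # {'a': 'gpu-0', 'b': 'gpu-1', 'c': 'gpu-1', 'd': 'gpu-1'}
print(load)        # {'gpu-0': 100, 'gpu-1': 120}
```

In this run, the large request "a" occupies gpu-0 and the three smaller requests share gpu-1, so neither GPU sits idle. Production schedulers use richer signals than a single cost number.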

3. Model as a Service (MaaS)

For organizations that must keep data private for compliance, security, or intellectual-property reasons, the platform lets them host AI models on their own infrastructure and offer them to internal teams as a shared service. Red Hat and Cisco are making this more accessible and easier to implement without relying on external cloud providers.
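From an internal team's point of view, consuming a self-hosted model "as a service" can look like calling a cloud API, except that the endpoint lives inside the corporate network. Red Hat AI Inference Server is Red Hat's supported distribution of vLLM, and vLLM exposes an OpenAI-compatible HTTP API. On that basis, the minimal Python sketch below builds (but does not send) a chat-completion request. The host name, model name, API key and `build_chat_request` helper are all hypothetical.

```python
import json
import urllib.request

# Hypothetical internal endpoint: prompts and data never leave the corporate network.
BASE_URL = "https://models.internal.example.com/v1"


def build_chat_request(model, prompt, api_key):
    """Build an OpenAI-style chat-completion request for an internally hosted model."""
    body = {
        "model": model,  # hypothetical model name registered on the internal server
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Per-team key (hypothetical) so the service can meter and audit usage.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request("internal-llm", "Summarize our Q3 incident report.", "team-a-key")
# urllib.request.urlopen(req) would send it; it is not called here.
```

Keeping the client interface the same as a public cloud API is what lets teams switch to internally hosted models with minimal code changes.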

4. Agentic AI Enablement

Using the platform's support for Llama Stack and the Model Context Protocol (MCP), enterprises can build AI agents and connect them to internal tools to streamline and automate workflows.
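MCP is built on JSON-RPC 2.0. An agent invokes a tool by sending a `tools/call` request containing the tool's `name` and `arguments`. The simplified Python sketch below shows that message shape and a toy server-side dispatcher. It is not a complete MCP server: it omits the initialization handshake, tool discovery and transport, and it does not use the official MCP SDK or Llama Stack. The `lookup_ticket` tool and its data are hypothetical.

```python
import json


# Hypothetical internal tool an agent might be allowed to call.
def lookup_ticket(ticket_id: str) -> str:
    tickets = {"INC-1042": "VPN outage in EMEA, status: resolved"}
    return tickets.get(ticket_id, "ticket not found")


TOOLS = {"lookup_ticket": lookup_ticket}


def make_tool_call(request_id, name, arguments):
    """Client side: a JSON-RPC 2.0 request in the shape MCP uses for tools/call."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def handle(message):
    """Server side (simplified): dispatch the call to a registered Python function."""
    req = json.loads(message)
    params = req["params"]
    tool = TOOLS.get(params["name"])
    if tool is None:
        # -32602 is the JSON-RPC "invalid params" error code.
        return {"jsonrpc": "2.0", "id": req["id"],
                "error": {"code": -32602, "message": f"Unknown tool: {params['name']}"}}
    text = tool(**params["arguments"])
    return {"jsonrpc": "2.0", "id": req["id"],
            "result": {"content": [{"type": "text", "text": text}], "isError": False}}


response = handle(make_tool_call(1, "lookup_ticket", {"ticket_id": "INC-1042"}))
```

Because only tools registered in `TOOLS` can be dispatched, the enterprise controls exactly which internal systems an agent can reach.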

5. Governance & Optimization

Built-in safeguards help ensure that AI is used responsibly, with attention to fairness, safety, and transparency. Optimization tools such as LLM Compressor shrink models (for example, through quantization) so they run faster and cost less to serve.
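Quantization is one reason compressed models are cheaper to run. Each weight is stored in 1 byte (INT8) instead of 2 (FP16) or 4 (FP32), at the cost of a small rounding error. The Python sketch below shows symmetric per-tensor INT8 quantization on a handful of hypothetical weights. It illustrates the general idea only. It does not use LLM Compressor's API, and production tools apply more sophisticated schemes.

```python
def quantize_int8(weights):
    """Symmetric per-tensor INT8 quantization: w ~= scale * q, with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0  # guard against an all-zero tensor
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the 8-bit integers back to approximate floating-point weights."""
    return [scale * v for v in q]


weights = [0.42, -1.27, 0.05, 0.9]  # hypothetical weight values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q)  # [42, -127, 5, 90]
```

The rounding error per weight is at most half of `scale`. Whether that loss is acceptable for a given model and task has to be checked by evaluation, which is why compression is usually paired with accuracy testing.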

6. Model Lifecycle Management

The Red Hat and Cisco platform covers the full model lifecycle: training models on enterprise data, deploying them across cloud, on-prem, and edge environments, and tracking their performance to keep them reliable and compliant.

The solution is tailored for sectors such as financial services, healthcare, government, and manufacturing, as well as any organization seeking scalable, controlled, and cost-effective AI capabilities. This positioning points to a focus on industries with strict compliance requirements and a need for efficient resource management, and it highlights the platform's potential advantages in flexibility and operational oversight.

Our Take

CIOs and CTOs have faced a difficult choice: build costly, complex AI platforms in-house, or rely on cloud services that limit control and drive up costs. Red Hat and Cisco now offer a third option: a secure platform that lets enterprises adopt AI at scale while retaining control of their data, infrastructure, and costs. This makes them valuable partners for organizations that prioritize governance, flexibility, and sovereignty.

The Red Hat and Cisco AI platform provides valuable benefits, but CIOs and CTOs should note potential challenges. Integrating with current systems takes effort, managing AI workloads needs skilled staff, and upfront infrastructure costs can be high. Clear governance is essential to use safeguards effectively. Shifting to the hybrid full-stack model may require changes in culture and operations, and some dependencies on specific tools or hardware could remain despite the platform’s open design.

There are other players in this market. At the recent NVIDIA GTC event, HPE introduced an expanded NVIDIA AI Computing by HPE portfolio aimed at simplifying AI deployment and scaling for governments, regulated industries, and businesses. There is also a fast-growing trend toward "sovereign AI factories," driven by increasing demand from both public and private sector clients.